KaurKuut.com

My thoughts, online.

Welcome to the world of microbenchmarks

Feb 4, 2017

Microbenchmarks have been popular for as long as I can remember. Recently some extra ridiculous HTTP request handling ones have been making the rounds.

A million requests per second with Python

(More than) one million requests per second in Node.js

Over a million HTTP requests/second with Node.js & Python. Only 70k with nginx, and an embarrassing 55k with Go. What is going on here? Is Google asleep at the wheel?

On the internet nobody knows you're a dog. Similarly nobody knows that your microbenchmark results are misleading at best.

First off, these specific tests use HTTP/1.1 pipelining. There's not a single actively developed browser in existence that supports HTTP/1.1 pipelining out of the box. Thus you certainly can't use this for web development. Secondly, as is the norm for microbenchmarks like this, they are doing all these millions of requests from a single client that's located on the same machine. How many real world use cases are there where a single localhost client will do a million requests per second and also support HTTP/1.1 pipelining?
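For readers who haven't met pipelining: the client writes several requests onto one connection before reading any response. Here is a minimal sketch in Python. The function names are made up for illustration, and the `Connection: close` header on the last request is my own addition so that the server ends the stream and the read loop terminates.

```python
import socket


def build_pipelined_requests(host: str, paths: list[str]) -> bytes:
    """Concatenate several GET requests so they can be written in one go."""
    requests = []
    for index, path in enumerate(paths):
        lines = [f"GET {path} HTTP/1.1", f"Host: {host}"]
        if index == len(paths) - 1:
            # Ask the server to close after the final response.
            lines.append("Connection: close")
        requests.append("\r\n".join(lines) + "\r\n\r\n")
    return "".join(requests).encode("ascii")


def send_pipelined(host: str, port: int, paths: list[str]) -> bytes:
    """Write every request first, then read all responses back (pipelining)."""
    payload = build_pipelined_requests(host, paths)
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(payload)  # no waiting for a response between requests
        chunks = []
        while True:
            chunk = conn.recv(65536)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    print(build_pipelined_requests("localhost", ["/a", "/b", "/c"]).decode())
```

A browser does the opposite: it waits for each response (or opens parallel connections), which is why throughput numbers measured this way say little about web traffic.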

I've seen people claim: "Not totally useless. This shows the performance and overhead of the library/framework not the task. [...] I usually take it to mean divide stated performance by 10 immediately if a simple DB query is involved, etc."

Microbenchmark results don't linearly scale to everything else. Just because language X can print "Hello, World!" 2x faster than language Y doesn't mean that every other operation is also 2x faster. For example a huge factor is algorithm quality. Language X may be fast at "Hello, World!", but then proceed to have QuickSort as its standard sorting function, while language Y has Timsort. [1] Language X may have a nicely optimized C library for hashing, while language Y has AVX2 optimized ASM. Some languages don't even have a wide & well-optimized standard library. Thus you can only really tell how good a language/library is for your use case if you test with an actual real world scenario.

Additionally, the ultimate microbenchmark winning code is one that does every trick in the book while not caring about anything else. This means hooking the kernel, unloading every kernel module / driver that isn't necessary for the microbenchmark, and doing the microbenchmark work at ring 0 with absolute minimum overhead. Written in ASM, which is implanted by C code, which is launched by node.js. Then, if there's any data dependent processing in the microbenchmark, the winning code will precompute everything and load the full 2 TB of precomputed data into RAM. The playing field is even, of course: JVM & Apache, or whatever else the competition is, will also be run on this 2 TB RAM machine. They just won't use it, because they aren't designed to deliver the best results in this single microbenchmark. The point is that not only do microbenchmark results fail to scale linearly to other work, but the techniques used to achieve them may even be detrimental to everything else!

These not-real-world microbenchmarks are definitely useless from an engineering perspective. However, they aren't completely useless. Their use is marketing. It gets the word out to developers that product X is really good! So what if it doesn't translate to real world scenarios, or even if the numbers are completely fabricated and you can't reproduce them under lab conditions? Very few people care enough to look at things that closely. Just seeing a bunch of posts claiming product X is really good is enough to leave a strong impression that product X really is that great. Perception is reality, and perception is usually better influenced by massive claims (even if untrue) than by realistic iterative progress. "Our product is 5% faster than state of the art!" just doesn't have that viral headline nature that you need to win over the hearts of the masses.

The RethinkDB postmortem had a great paragraph about these microbenchmarks: "People wanted RethinkDB to be fast on workloads they actually tried, rather than “real world” workloads we suggested. For example, they’d write quick scripts to measure how long it takes to insert ten thousand documents without ever reading them back. MongoDB mastered these workloads brilliantly, while we fought the losing battle of educating the market."

As for why someone would perform & propagate these microbenchmarks: maybe they don't know better, or maybe they are doing it because they have decided to invest in some technology tribe and thus profit from that tribe surviving, and even more from it growing. This is a pretty automatic behavior for humans. Take any tribal war, e.g. Xbox One vs PS4. Those who happen to own an Xbox One (perhaps as a gift) can be seen at various places passionately arguing that Xbox One is better than PS4, even if objectively it has slower hardware and fewer highly acclaimed exclusive games. The person is in the Xbox One tribe, and getting more users to own an Xbox One will mean that more developer investments are also made towards the Xbox One thanks to the bigger user base. Thus even if the original claims to get users into the tribe were false, if growth is big enough it may work out well enough in the end.

In conclusion, be sure to assign almost zero value to microbenchmark results. The only exception should be when you already have a working application and you have profiled it and identified the hotspots. Perhaps more than 50% of your request handling CPU time is spent on computing some hash. Now you know that finding the fastest hash implementation will actually have a meaningful impact on your system.
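To make "profile first" concrete, here is a small sketch using Python's built-in `cProfile`. The request handler and its hash-heavy helper are hypothetical stand-ins, not code from any real application. The point is only that the profile report, not a benchmark headline, tells you where the time goes.

```python
import cProfile
import hashlib
import io
import pstats


def compute_hash(payload: bytes) -> str:
    # Deliberately expensive: stands in for the hypothetical hash hotspot.
    digest = payload
    for _ in range(2000):
        digest = hashlib.sha256(digest).digest()
    return digest.hex()


def render_response(body: str) -> str:
    # Cheap work that should barely register in the profile.
    return "HTTP/1.1 200 OK\r\n\r\n" + body


def handle_request(payload: bytes) -> str:
    return render_response(compute_hash(payload))


def profile_requests(count: int = 50) -> str:
    """Run the handler under the profiler and return the text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    for i in range(count):
        handle_request(str(i).encode())
    profiler.disable()

    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("cumulative").print_stats(5)
    return out.getvalue()


if __name__ == "__main__":
    print(profile_requests())
```

Only if `compute_hash` dominates the cumulative time column is it worth going shopping for a faster hash implementation.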

However even then it may not be worth taking the time to deal with it. Talking to users and finding out what problems they have and solving those problems will lead to more success every single time. Unless your users are complaining that performance sucks, odds are you don't need to spend your resources on improving performance and instead need to spend it on actual problems your users are having.

Electronic Arts Hates Strong Passwords

Jun 26, 2011

At midnight on June 25 LulzSec released yet another truckload of user/password combinations, including some from the Electronic Arts game Battlefield Heroes. I used to play Battlefield Heroes, as I have been a huge Battlefield fan since the dawn of the series. Interested to see whether I was present in the hacked database, I immediately downloaded the CSV file and searched for my account name. Sure enough, there I was, along with a familiar looking hash. I quickly threw the hash into an MD5 hash-to-source dictionary service. Boom! Unsalted MD5, and my password was staring me in the face. It was an old password of mine, one of the first I had chosen after upgrading from short all lower case passwords. This meant that I didn't need to panic, as I have different passwords in most places. Still, it was my password for Battlefield Heroes and, more crucially, it was also the username/password combination for my EA account, which controlled a bunch of other games like Battlefield: Bad Company 2.

I quickly set myself a goal to change the EA account password, so I launched Origin. Origin is EA's quarter-assed wannabe clone of Steam. I carefully looked around, into every corner and sub-dialog I could find. There was no password changing option. Amazed at how bad Origin is, I went straight to EA.com, as surely there must be a way to change my password there. Nope, even less account configuration than Origin provided. I then proceeded to log out from EA.com and claim I had lost my password. This worked fairly well until I tried to actually set a new password.

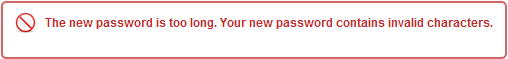

What the fuck? Flabbergasted, I decided to log back in with my old password and dig around the help section to find some documentation on what sort of passwords they allow, as the error is very opaque. While looking around in the help section I stumbled upon a My Account link. Yes, I had finally found the web based account management section, nicely hidden away from users. I tried to change the password again, this time from the account management page.

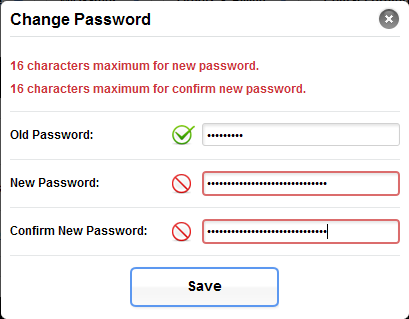

At least this error tells me the exact length limit, but 16 characters? A maximum of sixteen characters? This must be a joke.

OK, I tried with a shorter password then.

Invalid characters? It was getting ridiculous. I decided to do some research with some of the symbols that I could easily access on my keyboard. Here are the results:

Allowed: ! # % & ( ) = ` ' + ; : - _ < > [ ] { } $ @ *

Not allowed: " ¤ / ? ´ ˇ ~ , . | \ £ € § ü õ ö ä ž š

What about the minimum limits?

I tried changing my password to asd. No luck, 4 characters minimum! What about cool? Success!
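The rules observed so far can be written down as a small validator. This is a reconstruction from the experiments above, not EA's actual code. It assumes plain ASCII letters and digits are accepted, which the tests imply but never state outright.

```python
import string

# Symbols that the account page accepted during the experiments above.
ALLOWED_SYMBOLS = set("!#%&()=`'+;:-_<>[]{}$@*")
# Assumption: ASCII letters and digits are allowed; accented letters are not.
ALLOWED_CHARS = set(string.ascii_letters + string.digits) | ALLOWED_SYMBOLS

MIN_LENGTH = 4
MAX_LENGTH = 16


def ea_accepts(password: str) -> bool:
    """Return True if the observed EA rules would let this password through."""
    if not MIN_LENGTH <= len(password) <= MAX_LENGTH:
        return False
    return all(ch in ALLOWED_CHARS for ch in password)


if __name__ == "__main__":
    for candidate in ["asd", "cool", "y756V3~J|d242~s89k75;37x-544df"]:
        print(repr(candidate), ea_accepts(candidate))
```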

In summary, EA doesn't want people using strong passwords like y756V3~J|d242~s89k75;37x-544df. It also doesn't want people using cute but decent passwords like ´[~.~]`. It is completely acceptable however to use passwords like cool, pass, love. Also the passwords will be stored using unsalted MD5 in easily hackable servers. Amazing.
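Why does "unsalted MD5" matter so much? The same password always produces the same hash, so a precomputed dictionary reverses it instantly. Here is a minimal sketch of the difference, using only Python's standard library. PBKDF2 with a random per-user salt is one common alternative, picked for illustration. The iteration count is an assumption, not a recommendation.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


def unsalted_md5(password: str) -> str:
    # What the leaked database apparently stored: one fast, deterministic hash.
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def hash_password(
    password: str, salt: Optional[bytes] = None, iterations: int = 200_000
) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using PBKDF2-HMAC-SHA256 with a per-user salt."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return salt, digest


def verify_password(
    password: str, salt: bytes, expected: bytes, iterations: int = 200_000
) -> bool:
    _, digest = hash_password(password, salt, iterations)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(digest, expected)


if __name__ == "__main__":
    print(unsalted_md5("cool") == unsalted_md5("cool"))  # identical every time
    salt_a, digest_a = hash_password("cool")
    salt_b, digest_b = hash_password("cool")
    print(digest_a == digest_b)  # different salts, so the digests differ
```

With a salt, two users with the password cool no longer share a hash, and a generic lookup table stops working.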

Bitcoin or how I made 400 euros in two months

May 24, 2011

It was a cold evening on February 14, 2011 when I heard about a service called compute4cash that promised to pay US dollars in exchange for letting them run some calculations on my machine. Their software didn't require administrator privileges so I carefully started to try it out. After all, I could use some extra cash flow! I occasionally ran the program during the next two weeks, totalling 62 hours of actual runtime by Feb 28th, when I finally made a withdrawal of $11.41. It worked! Not exactly the road to riches but still a reasonable sum for basically doing nothing. I continued to run the program at night, but on the 5th of March I decided to investigate what the hell I was actually calculating and how much this calculation was worth to the organizers. After a few minutes of googling I found out that compute4cash is actually a rebranding of this thing called bitcoin.

So bitcoin is a digital currency and one of its main selling points is that it is decentralized. No single body has total authority, instead the network as a whole decides what is ok and what is not. Bitcoin is also semi-anonymous, allowing you to create new sender/receiver addresses for every transaction.

In its current state bitcoin is nowhere near ready for prime time, both due to lack of reach and due to the cumbersome/non-existent software. I would pretty much ignore bitcoin if it were just a regular currency replacement. Luckily it has more going for it. Namely, the technical and economic architecture is brilliant. Bitcoin is designed in such a way that there will only ever be a potential maximum of 21 million coins available. These coins, however, are not given out all at once nor completely at random. Instead it is possible to take part in the bitcoin lottery, and the lottery tickets can be purchased using computing power. Basically you run a program that tries to solve a difficult one-way math problem. By one-way I mean it is difficult to compute but trivial to verify. Your chance of solving this problem is completely random, but if you do, you have essentially won the lottery and get 50 coins. The more computing power you throw at this problem, the more probable it gets that you will solve it. However, the bitcoin network will adjust the difficulty of the problem after every 2016 solutions. The goal of the network is to adjust the difficulty so that 2016 solutions get found every two weeks. This difficulty adjustment is necessary to not exhaust the maximum supply of 21 million coins before the year 2140. In addition to that, the number of bitcoins you get by solving a problem is halved every 4 years. So the essence of bitcoin is scarcity, similar to gold. Even the mathematical problem solving to win some coins is called mining by most.
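The two mechanisms above, the hard-to-solve but easy-to-verify lottery and the halving schedule that caps supply, can be sketched in a few lines of Python. This is a toy model, not the real protocol. The header bytes, the 8-byte nonce and the "leading zero bits" difficulty are simplifications chosen for illustration, and the 210,000 blocks per halving is the network's actual figure (roughly four years of ten-minute blocks), which the text above only gives in years.

```python
import hashlib
from typing import Optional


def meets_target(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Verification is one double SHA-256 and a comparison: trivial to check."""
    data = header + nonce.to_bytes(8, "little")
    digest = hashlib.sha256(hashlib.sha256(data).digest()).digest()
    target = 1 << (256 - difficulty_bits)
    return int.from_bytes(digest, "big") < target


def mine(header: bytes, difficulty_bits: int, max_nonce: int = 10_000_000) -> Optional[int]:
    """Finding a nonce is brute force: try values until one wins the lottery."""
    for nonce in range(max_nonce):
        if meets_target(header, nonce, difficulty_bits):
            return nonce
    return None


def total_supply_satoshi(initial_reward_btc: int = 50,
                         blocks_per_halving: int = 210_000) -> int:
    """Sum the block rewards over all halvings, in units of 1e-8 coin."""
    reward = initial_reward_btc * 100_000_000
    total = 0
    while reward > 0:
        total += reward * blocks_per_halving
        reward //= 2  # the reward halves, rounding down, until it reaches zero
    return total


if __name__ == "__main__":
    nonce = mine(b"toy block", difficulty_bits=16)
    print("winning nonce:", nonce)
    print("total supply (BTC):", total_supply_satoshi() / 100_000_000)
```

Each extra difficulty bit doubles the expected number of tries for `mine` while `meets_target` stays a single check, which is the asymmetry the lottery relies on. The supply sum converges just below 21 million because the rewards form a geometric series.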

Originally it was commonplace to mine coins with a CPU. However, it didn't take long for GPU optimized programs to show up. A GPU can solve this specific math problem much faster than a CPU. As more people moved on to GPU mining it quickly became clear that CPU mining was just wasting energy and no match whatsoever for powerful GPUs.

So here I was with my AMD Radeon HD 5850, just fresh off compute4cash and ready to cut out the middleman. I quickly installed the official bitcoin client and a custom GPU miner and started mining. Luckily I solved my first block only two days after starting. This was most likely a key factor in keeping me hooked. I mined and mined up until about the 7th of May. There had been a major influx of new miners and as such the difficulty had risen dramatically. At that point I decided to join a pool. Mining in a pool basically means that a group of people all try to solve the same problem and then share the gains proportionally based on how much computing power everyone provided, with the pool organizer taking a small cut for himself. I was willing to take a hit on my profits from the pool tax because in return I received a much more stable income.
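The proportional split described above is simple arithmetic. A minimal sketch follows. The miner names, contribution figures and the 2% fee are hypothetical numbers for illustration only, not the terms of any particular pool.

```python
from typing import Dict


def split_reward(reward: float, contributions: Dict[str, float],
                 fee_rate: float) -> Dict[str, float]:
    """Share a block reward in proportion to contributed work, after the pool fee."""
    distributable = reward * (1.0 - fee_rate)
    total_work = sum(contributions.values())
    return {
        miner: distributable * work / total_work
        for miner, work in contributions.items()
    }


if __name__ == "__main__":
    # Hypothetical pool: "me" supplies 3% of the work, the fee is 2%.
    payouts = split_reward(50.0, {"me": 300.0, "everyone else": 9700.0}, 0.02)
    for miner, amount in payouts.items():
        print(f"{miner}: {amount:.2f} BTC")
```

The expected income is slightly lower than when mining solo, because of the fee, but it arrives as many small payouts instead of a rare 50 coin jackpot.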

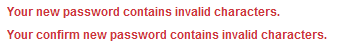

Now this is all great but I'm not running my GPU on full power for just a bunch of bitcoins. This is where Mt. Gox comes in. Mt. Gox is the premier bitcoin exchange where people go to trade coins for USD. The exchange is not liquid at all and the volumes are really low. Still, there are a bunch of speculators who are willing to buy and sell bitcoins for USD. I'm not interested in speculating on the market itself so I've cashed out at pretty random times at whatever price the coins would sell for.

18.04.11 150 BTC => 115.00 €

05.05.11 50 BTC => 109.49 €

07.05.11 50 BTC => 121.69 €

15.05.11 10 BTC => 44.54 €

22.05.11 10 BTC => 43.98 €

Total: 434.70 €
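As a quick sanity check of the numbers above, the sketch below recomputes the total and shows the price per coin at each sale. It uses only the figures listed above. `Decimal` is used so that the euro amounts add up exactly.

```python
from decimal import Decimal

# (date, coins sold, euros received), copied from the list above.
CASHOUTS = [
    ("18.04.11", 150, Decimal("115.00")),
    ("05.05.11", 50, Decimal("109.49")),
    ("07.05.11", 50, Decimal("121.69")),
    ("15.05.11", 10, Decimal("44.54")),
    ("22.05.11", 10, Decimal("43.98")),
]


def summarize(cashouts):
    """Return (total coins sold, total euros received)."""
    total_btc = sum(btc for _, btc, _ in cashouts)
    total_eur = sum((eur for _, _, eur in cashouts), Decimal("0"))
    return total_btc, total_eur


if __name__ == "__main__":
    for date, btc, eur in CASHOUTS:
        price = (eur / btc).quantize(Decimal("0.01"))
        print(f"{date}: {price} EUR per BTC")
    coins, euros = summarize(CASHOUTS)
    print(f"Total: {coins} BTC for {euros} EUR")
```

The per-coin price rises from well under one euro in April to over four euros by mid May, which is why the last two small sales bring in almost as much as the first big one.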

That's quite good for about 2 months of not playing graphically demanding games. It's really fuzzy what the future will bring to bitcoin so I wouldn't dare to invest any substantial amount that otherwise would go to good use elsewhere. However, the money I've made thus far with bitcoin I'm willing to reinvest right back into the action, and as such I've bought myself some new hardware that will serve as a dedicated bitcoin miner. Having a quite limited budget, I was determined to squeeze out as much performance per euro as possible. I did a lot of research on how many calculations per second each GPU can do. Also, the bitcoin problem involves integer mathematics, not floating point, so I only needed to compare AMD cards and could completely ignore Nvidia products. Having a decent understanding of the performance of a set of cards, I then gathered info on their power consumption and prices. Using all this info I generated some nice cost/benefit tables.

Bitcoin mining doesn't really use any CPU power so basically any CPU would do. However, it might be more beneficial to put multiple GPUs inside a single setup. So I looked at several different motherboards (some can fit even 5 GPUs!). Having multiple GPUs inside a single setup would require a lot of power and some excellent cooling, especially as I wanted to overclock the cards. Researching power supply units turned out to be a really intriguing experience. In the past I've never opted for the cheap ones because I knew that they suck, but I didn't really know why they suck. Also, with all the computers I've built in the past (tens of them) I've never really calculated the exact power needs of the components, how that power would be delivered, and at what reliability and efficiency. Instead I've taken the easy road and just bought a high end model that would absolutely be more than I could use. This time, however, I was on a tight budget and building a very specific purpose machine that would be running in extreme circumstances, so I couldn't just buy an over-the-top PSU nor a random low end one. On top of everything else I found out that even high end manufacturers lie about their products. For example Corsair likes to rate their PSUs at 30 degrees Celsius, which isn't exactly a realistic temperature for heavy load usage.

After deciding on what components I need to buy I started scouting around my local used computer parts forum and found some pretty decent deals and the rest I bought new.

| Component | Model | Qty | Price |
| --- | --- | --- | --- |
| CPU | AMD Athlon 64 X2 3800+, 2.0 GHz, Socket AM2 | 1 | 60.00 € |
| CPU cooler | Zalman CNP9500AM2 | 1 | |
| Motherboard | MSI K9N SLI Platinum | 1 | |
| RAM | Corsair 1GB XMS2 Extreme, DDR2, 800 MHz | 2 | |
| GPU | Gigabyte Radeon HD 5850 OC | 1 | 215.00 € |
| GPU | Club3D Radeon HD 5850 | 1 | |
| Storage | Kingston 8GB USB DataTraveler 100 G2 | 1 | 11.55 € |
| PSU | SeaSonic S12II-520 Bronze 520W | 1 | 61.50 € |
| Case | Antec Three Hundred | 1 | 58.30 € |
| Extra cooling | Nexus REAL SILENT 120mm D12SL-12 | 3 | 21.60 € |
| Dust filter | XILENCE 120mm case fan filter | 1 | 0.35 € |
| Total | | | 428.30 € |

If bitcoin mining continues to be profitable then this dedicated miner should serve me well and if not then I'm still OK because I bought it with money I got from bitcoin in the first place. However I'm a small fish in a big ocean of bitcoin miners and so as a bonus here is a video of a guy who got serious much earlier than me.

Google App Engine is a classic bait-and-switch scam

May 11, 2011

Back in October 2009 I was roaming the web, reading everything I could on cloud computing. I was in the middle of development of Repel for DotA-GC and it needed a web service component. The $9/month HostGator shared hosting plan DotA-GC had at the time wasn't going to cut it. One option was to get a regular dedicated server, but even the cheap HostGator dedicated servers were around $200/month. Not exactly a bargain, especially when compared to the $1/month AdSense revenue that the DotA-GC website was pulling in.

Cloud computing was just starting to gain some real buzzword status and I like cutting edge technology so I looked into it.

The first offering I looked at was Amazon EC2. To me it looked like just an expensive way to buy dedicated servers. There wasn't really much added value there and now I can put a fancy word on that - EC2 is infrastructure as a service (IaaS). I was more interested in platform as a service (PaaS), so I looked further.

I found Salesforce, but I didn't really understand what they were offering. Actually, to this very day I'm not quite sure. This most likely means that whatever they offer isn't even related to my needs.

Then I found Microsoft Azure. C#? SQL? I was hooked! Most of the DotA-GC system was built with C# and MSSQL at the time, so it seemed like the perfect match. I looked around, watched some videos, read some articles - everything looked great, including the pricing. That is, until I found a thread on the Azure forums that explained something that wasn't even mentioned with an asterisk on the official pricing page. The minimum monthly price would be quite high (ca $100/month) because an instance would need to be running 24/7. This wasn't really on-demand scaling like they were advertising so yet again I had stumbled upon a service that is more like a dedicated server than some magic cloud scale.

I was starting to wonder what the hell all this cloud computing buzz is about? However I didn't give up just yet and looked around some more and then it happened. I discovered a really obscure cloud computing service, offered by Google of all companies - Google App Engine (GAE). GAE didn't have a minimum monthly price, in fact it had a really nice free daily quota (6.5 CPU hours, 1GB storage, 1GB bandwidth). More importantly though, there was no need to pay for idle time as the instances were fired up on-demand automatically by the system and I would be charged only for actual usage. I did some calculations and basically I concluded that I can run my service for free on GAE, while on Azure and the like it would cost me $100/month. If my application usage would grow then the costs would be similar to other offerings, but until then I wouldn't have to worry too much about income streams.

What about downsides? I would have to learn to design systems that fit the GAE philosophy and also learn a new language, either Python or Java. Because I had a lot of experience with C++ and C# and porting old code would be easier to Java, I chose Java. I would have to lock myself into GAE and in return I would get a lot of nice features and a startup friendly pricing plan. Plus it was Google, and Google is awesome right?

That's what I thought and everything was going relatively well for 18 months straight. In September 2010 I even started building a totally new web based system for DotA-GC on top of GAE. Unfortunately all good things come to an end, and on May 10th 2011 during Google I/O the new pricing plans for GAE were announced, and I quote: "In order to become an official Google product we must restructure our pricing model to obtain sustainable revenue." Now I could maybe even accept a 2x or 3x price increase because the service is quite awesome, but this is not the case.

Let's calculate the current DotA-GC web server costs with the new pricing and compare them to the old ones. (Raw numbers for May 10, 2011)

Currently everything fits inside the free daily quota, except blobstore storage for which I have to pay $0.02/day, or $0.60/month.

With the new pricing the breakdown would be:

$09.00 flat fee per month

$04.80 2 extra instance hours per day for a month

$23.10 datastore operations

$02.10 channels opened

--------

$39.00/month

$39/month is 65 times more expensive than $0.60/month. Sixty-five times more expensive. This is ridiculous. The increase will be even higher for Python applications, because every additional active instance is now charged for ($0.08/h or $57.60/month). All my GAE applications are written in Java and so my blow is somewhat softened by multi-threading the requests into the initial free instance.
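For anyone who wants to check the arithmetic, here is the same calculation as a short Python sketch. All figures come from the breakdown above. The 30-day month is the assumption that makes $0.08/h come out at $57.60.

```python
from decimal import Decimal

OLD_MONTHLY = Decimal("0.60")  # blobstore storage under the old pricing

NEW_ITEMS = {
    "flat fee": Decimal("9.00"),
    "extra instance hours": Decimal("4.80"),
    "datastore operations": Decimal("23.10"),
    "channels opened": Decimal("2.10"),
}

INSTANCE_HOUR = Decimal("0.08")
DAYS_PER_MONTH = 30  # assumption used for all monthly figures

new_monthly = sum(NEW_ITEMS.values(), Decimal("0"))
increase = new_monthly / OLD_MONTHLY

# 2 extra instance hours per day, and one extra instance running 24/7.
extra_hours_monthly = INSTANCE_HOUR * 2 * DAYS_PER_MONTH
full_instance_monthly = INSTANCE_HOUR * 24 * DAYS_PER_MONTH

if __name__ == "__main__":
    print(f"new total: ${new_monthly}/month")
    print(f"increase: {increase}x")
    print(f"2 extra hours/day: ${extra_hours_monthly}/month")
    print(f"one always-on extra instance: ${full_instance_monthly}/month")
```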

They completely removed the old CPU usage based pricing and added a bunch of pricing meters that I haven't optimized for. A lot of my CPU usage optimization investments have effectively gone down the drain. This really makes me all warm and fuzzy inside. To be fair, I could probably cut the new price in half with a lot of new clever optimizations. However, this will require quite a bit of investment on my part that I otherwise wouldn't have had to make. Not to mention, even the halved price of $19.50/month isn't that great as it's still a 33x increase over the old one. I could get much more capacity out of $20/month if I went with an alternative service, and there are a truckload of new competitors in this space now.

It could be argued that the additional value of GAE (it's a PaaS, not an IaaS after all) is worth the high price. This may be true, and maybe I could even trick myself into paying this higher price if my application had the revenue to pay for it. However, it does not, and this brings out the most crucial change in the new pricing plan in my opinion. It is a merger of the GAE for Business pricing and the old regular pricing, and the result is biased heavily towards enterprise. GAE is no longer startup friendly, not ramen noodles startup friendly anyway. The new free quotas are a small fraction of what they used to be and the initial step to a paid plan is a steep one. Perhaps not as steep as Azure, but steep nevertheless.

This whole situation feels like Google designed the original preview prices to attract a lot of startups and single developers who are willing to take the risk of an untested platform. Now that GAE is thoroughly tested and fairly stable with the high replication datastore, they changed the prices to match enterprise budgets and the no longer necessary testers need to cough up the dough or fuck off.

It has been almost 24 hours since I first learned of these price changes and I haven't really thought about anything else all this time. This really shakes up my situation. I either need to quickly find additional income streams for my applications, shut them down or take on the suicidal task of redesigning the systems for alternative platforms. Those choices all kind of suck, so to end on a brighter note I can say that I learned a valuable lesson. I should always minimize dependencies on specific external services and products as much as possible.

iPhone 4 vs Galaxy S, i.e. choosing my first smartphone

Aug 22, 2010

I have never owned a smartphone, but now I may be finally taking the plunge. I have narrowed down the choice to two top contenders, the Samsung Galaxy S and the Apple iPhone 4. I am now going to compare these two phones with the goal of eventually selecting one for my personal use. Also, I should note that I live in Estonia and thus not all points I bring up in this article will be the same in some other regions.

Let's take a look at the big and obvious first, the displays. The Galaxy S has a 4" Super AMOLED display (manufactured by Samsung), which is an evolution of the AMOLED display you can find on devices such as the Google Nexus One. An AMOLED display requires no backlight, so for black pixels there is no need to use power at all and this results in efficient power usage and excellent contrast ratios. Because every pixel emits its own light the viewing angles are exceptionally good, with no color change even at 90 degrees. AMOLED also offers very fast response times, potentially even less than 0.01 ms, compared to 1 - 5 ms for most LCDs. One of the weaknesses of AMOLED is the inability to use it in direct sunlight. Super AMOLED addresses this issue directly, with Samsung claiming that compared to a regular AMOLED display the Super AMOLED has 80% less sunlight reflection, a 20% brighter screen and 20% lower power consumption.

The iPhone 4 has a 3.5" IPS LCD (manufactured by LG). It's an old and refined technology that can be produced easily in big numbers. It has a contrast ratio of 800:1, which is laughable compared to the Galaxy S Super AMOLED. The viewing angles are better than on older iPhone screens, but not at Super AMOLED levels. The IPS LCD does not function too well outside either. There are no iPhone 4 demo videos available at the time I'm writing this, but the iPad also has an IPS LCD and there are some demo videos available that showcase its inability to properly function in direct sunlight. There is also an LCD (Sony Ericsson XPERIA X10) vs AMOLED (HTC Desire) vs Super AMOLED (Samsung Galaxy S) sunlight test video available. My theory here is that Apple chose the IPS LCD screen either because of its low price or, more likely, because Samsung was unable or unwilling to provide the huge number of Super AMOLED displays that Apple requested. Luckily Apple didn't give up so easily and made the best of the bad situation by greatly increasing the resolution. The iPhone 4 3.5" screen has a whopping 960x640 resolution, which results in 326 dpi. Apple is marketing this as the Retina display, claiming that the human eye won't be able to see the individual pixels from 30cm away from the screen. This has already been disputed by numerous people. However, regardless of whether I can see the pixels, it's a big step towards better image quality.

What about the Galaxy S Super AMOLED? It has a resolution of 800x480. Or has it? Actually 653x392 would be a more appropriate number, because the Galaxy S Super AMOLED display utilizes the PenTile technology, like the Nexus One.
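Where might 653x392 come from? One plausible reading (an assumption, the derivation is not given here) is that a PenTile pixel has two subpixels instead of the three in a regular RGB stripe. The panel therefore has 2/3 of the subpixels, and each dimension scales by the square root of 2/3. The sketch below applies that scaling and also computes pixel density from a nominal diagonal.

```python
import math
from typing import Tuple


def ppi(width_px: int, height_px: int, diagonal_inches: float) -> float:
    """Pixels per inch along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_inches


def rgb_equivalent(width_px: int, height_px: int,
                   subpixels_per_pixel: int = 2,
                   full_subpixels: int = 3) -> Tuple[int, int]:
    """Scale a resolution so the subpixel count matches a full RGB stripe panel."""
    scale = math.sqrt(subpixels_per_pixel / full_subpixels)
    return round(width_px * scale), round(height_px * scale)


if __name__ == "__main__":
    print("Galaxy S RGB-equivalent:", rgb_equivalent(800, 480))
    print("Galaxy S nominal ppi:", round(ppi(800, 480, 4.0), 1))
    print("iPhone 4 ppi at a nominal 3.5 inch:", round(ppi(960, 640, 3.5), 1))
```

With the nominal 3.5" diagonal the iPhone 4 comes out a few pixels per inch above Apple's quoted 326, presumably because the real diagonal is slightly larger than 3.5".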

The iPhone 4 screen has 4 layers: Glass, touch sensitive panel, TFT, backlight. The Galaxy S screen has 2 layers: Glass, Super AMOLED. Having a thinner screen gives an interesting effect, with the pixels almost popping out of the phone.

But wait, does that mean the Galaxy S does not have a touch screen? No, actually it's built into the Super AMOLED. And not just any touch panel, but the Atmel maXTouch mxt224 sensors. These same sensors can also be found on the HTC Incredible and the HTC EVO 4G. There is a video of these superior sensors in action, featuring the Galaxy S vs the HTC Desire. The iPhone 4 has also upgraded its touch screen technology from the 3GS, now using a TI 343S0499 controller. I have no comparison information on iPhone 4 and Galaxy S touch sensitivity but I guess they are not too far apart.

At the end of the day, the Galaxy S Super AMOLED display is better for images, video and gaming while the iPhone 4 IPS LCD is better for text.

3G Radios. Both phones support the 900MHz and 2100MHz bands for my Europe-based consumption. HSDPA 7.2 Mbit/s, HSUPA 5.76 Mbit/s for both phones. The iPhone 4 uses its main frame as the antenna, which in theory should give a significant advantage over the Galaxy S. Real life results, however, have caused a huge outcry. Many iPhone 4 owners are saying that they drop calls when they touch certain parts of the frame. I don't think this is as huge an issue as the press is making it out to be.

Other connectivity. Both phones have an FM radio receiver, but only the Galaxy S has software that actually implements this. Apple most likely skipped using this feature in order to drive more sales towards its iTunes music store. For Bluetooth, the iPhone 4 has Bluetooth 2.1 + EDR, while the Galaxy S has Bluetooth 3.0. I couldn't care less either way, might as well omit the feature. For WiFi, 802.11 b/g/n for both the iPhone 4 and Galaxy S, although the iPhone 4 does not support the 5GHz band for 802.11n. The Galaxy S supports tethering over WiFi, meaning you can share your 3G data connection over WiFi to your other devices. 3.5 mm TRS audio socket for both. For the Galaxy S the socket also doubles for RCA to provide SD composite video out. On the iPhone 4 there is the need to buy special Apple docks & cables but it is possible to get SD video out to work. The Galaxy S also supports DLNA, which is basically a standard for sharing media wirelessly with other DLNA supporting devices (e.g. Sony PS3, Windows 7 PC). The Galaxy S also has a micro-USB 2.0 socket that can be used to connect to a PC and use the phone as a storage device. The iPhone 4 does not have a micro-USB socket, but an adapter to USB is available. The iPhone 4 can be used as a storage device only with special drivers and this functionality is not supported by Apple.

Gadgets. Both phones have an accelerometer, a proximity sensor, an ambient light sensor, a vibrator, a compass and an A-GPS. The iPhone 4 comes ahead by also having a gyroscope.

Performance. The iPhone 4 has the Apple A4 chip (which is ironically manufactured by Samsung), while the Galaxy S has the Hummingbird chip (also manufactured by Samsung). It turns out, however, that both of these chips use the same Intrinsity FastCore modification of the ARM Cortex A8 1GHz CPU. This is the fastest mobile CPU available at the time of this writing, beating even the mighty Qualcomm Snapdragon that is used in phones like the Google Nexus One and HTC Incredible. Where the A4 differs from the Hummingbird is the GPU. The A4 has a PowerVR SGX 535, while the Hummingbird has the PowerVR SGX 540, which Samsung claims is three times faster. In terms of RAM, both phones have 512MB.

Storage. The iPhone 4 comes in two flavors, 16GB and 32GB. The Galaxy S similarly offers two options, 8GB and 16GB, although it also supports a microSD card up to 32GB.

Power. The iPhone 4 has a built-in 1420mAh Li-Pol battery that provides 14 hours of 2G talk time, 7 hours of 3G, 10 hours of WiFi, 10 hours of video playback, 40 hours of audio playback, 300 hours of standby. The Galaxy S has a user-replaceable 1500mAh Li-Pol battery that provides 13 hours of 2G talk time, 7 hours of 3G, 7 hours of video playback, 680 hours of standby.

Camera. Both phones have a 5 megapixel camera on the back that can also record 720p video at 30 fps. Only the iPhone 4 has an LED flash though, which is a shame. I don't really care for the flash as a photography tool, as the sensor is weak anyway, but I'm used to using my phone as a flashlight. Then again, the Super AMOLED display with a blank white screen at full brightness might do the job just as well, if not better. Sample videos are available for the Galaxy S and the iPhone 4. It seems to me that the iPhone 4 achieves better results. Additionally, both phones also have a front facing VGA (640x480) camera.

Construction. The Galaxy S at 64.2 mm x 122.4 mm x 9.9 mm, the iPhone 4 at 58.6 mm x 115.2 mm x 9.3 mm. So the iPhone 4 is smaller in every dimension, but it weighs 137 g, while the Galaxy S weighs only 119 g. In terms of build quality the iPhone 4 seems to be way ahead with its steel frame and strong glass back and front. The Galaxy S is basic plastic that bends in every direction.

It is important to keep in mind that the hardware is only part of the story; we also need to look at the software. The iPhone 4 comes with iOS 4 and based on Apple's current track record, I predict it will be updated up to a stripped down version of iOS 7. The Galaxy S comes with Android 2.1 (with its proprietary TouchWiz skin on top of it) and it will most likely see a 2.2 update in 2010. Based on Samsung's history, however, it's not looking good after that. If the phone is successful then it might also see a Gingerbread update but I wouldn't bet on it. Now before any of you start commenting that I can "root it", yes I know it's possible to do custom updates on both phones via hacks, but as they are not supported or even allowed by the manufacturers, I'm not going to put those options down as features.

Now I'm not going to compare iOS and Android, as I don't have first hand experience with either of them and there are plenty of comparisons out there already.

However, let's talk media support. First up, audio. The iPhone 4 can play the following formats: MP3, WAV, AAC, HE-AAC, Audible, Apple Lossless, AIFF. The Galaxy S can play: MP3, WAV, AAC, HE-AAC, HE-AAC v2, FLAC, OGG, WMA, AC3, AMR-NB, AMR-WB, MID, IMY, XMF. Now some people might be jumping up and screaming "OGG!", but I don't really care for it myself. The interesting formats in the Galaxy S list to me are FLAC and AC3. Next, video. The iPhone 4 supports H.264 (up to 720p@30fps, Main Profile 3.1) with AAC-LC (up to 160 kbps, 48 kHz, stereo) packaged into .m4v, .mp4 or .mov. The Galaxy S supports DivX, Xvid, VC-1, Sorenson, H.263, H.264 (up to 720p@30fps, High Profile 5.1), in the following containers: MP4, MKV, AVI, WMV, 3GP, FLV. So technically the Galaxy S is definitely a much better media player.

One of the really big features of the iPhone 4 that I like is the App Store. I can purchase a lot of different applications and currently it has by far the biggest developer base. On the other hand the Galaxy S unfortunately doesn't have anything like this. It does have the Android Market but that is limited to very specific countries like the USA, so it's quite useless to me. I can't buy any applications from there nor can I sell any. There are also some other application stores for Android but they are extremely limited in their reach, effectively making them useless as well. So in terms of 3rd party applications for the phone there really is no comparison. The iPhone 4 has the App Store and the Galaxy S doesn't have anything.

To wrap it all up, if I should indeed decide to buy a smartphone it will definitely be the iPhone 4. Even though it might not be the best hardware-wise, it's the software that counts and the Galaxy S doesn't have any to speak of.